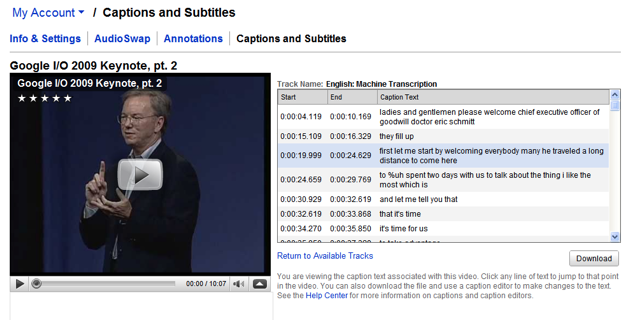

In the first week of May 2010, Google announced that it had released YouTube video transcription services. Although the feature first appeared in 2009, the beta version of YouTube video transcription was available only to a few selected government agencies, news broadcasters and universities.

The history of speech recognition technology can be traced back to the 1930s, when AT&T Bell Laboratories came up with a primitive device that was used to recognize speech. Researchers were aware that widespread use of speech recognition would depend on the ability to perceive complex and subtle verbal input consistently and accurately. Advances in speech recognition were very slow because the computing technology of the time was not powerful enough.

Fifty years later, the capabilities of numerous electronic devices had outdone the best and most expensive technologies developed in the 1930s. This was mostly due to breakthroughs in semiconductor and chip fabrication. Computer speed and power, which had been the main barriers, were no longer an obstacle to the accuracy and speed of speech recognition.

With more computing power than was available in the 1930s, programmers were able to develop algorithms to encode and decode a number of voice patterns. This meant that they could build a database of various voice patterns, convert them to digital waveforms and then analyze words based on the voice pattern signals. As speech-to-text know-how improved over the years, the tools became simpler to use, and a number of providers such as Google Voice, Microsoft (Vista, XP), Dragon Dictation and other niche organizations started offering these services to their clients.

The question to ask here is how reliable these technologies are, particularly Google's YouTube transcription, and whether they will ever overtake human accuracy.

Individuals who watch YouTube clips with captions may have noticed that the accuracy has improved over the past few months. This trend is expected to continue. The view has been echoed by Eric Schmidt, CEO of Google Inc., who says that it is a self-learning technology that will improve over time.

Some of the flaws that have been observed, however, include:

- Accurate captioning is only possible when the speaker is clear and distinct.

- The environment has to be silent.

- Errors can occur because of similar-sounding words.

- Interjections can confuse the transcription.

- Lack of confidence in the result is the major drawback, as the transcribed material has to be proofread several times to be sure of its accuracy.

It follows that humans are still the better option when it comes to transcription.